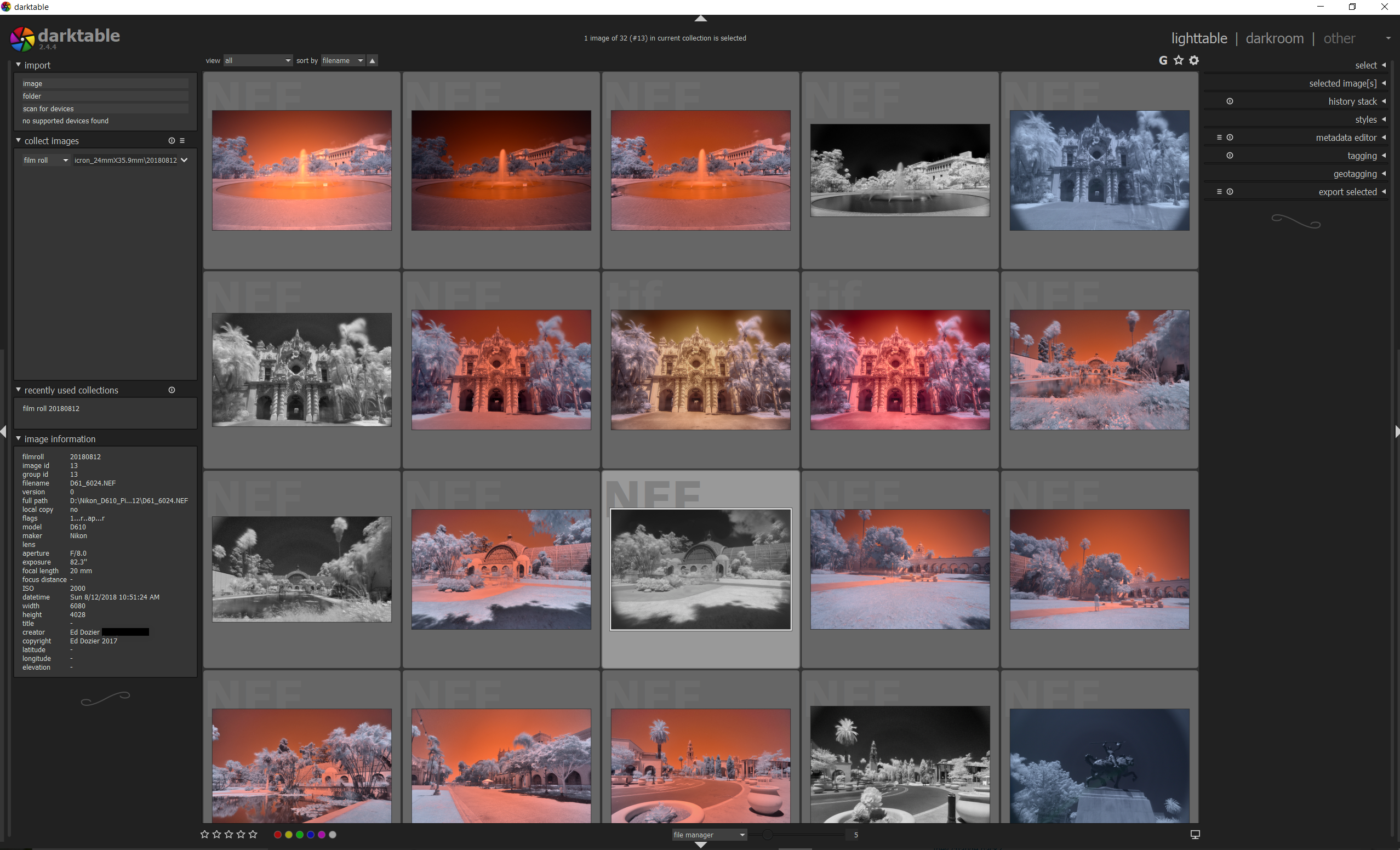

In order to determine how much your modifications improve (or not) darktable’s performance, you will need one or more sample images to test with, and a method of assessing the speed of the pixelpipe.

For sample images, you are advised to use some of the more intensive modules, such as diffuse or sharpen or denoise (profiled). Exports are likely to have more consistent and comparable timings between pipe runs than interactive work (and will also push your hardware more).

In order to obtain profiling information you need to start darktable from a terminal with darktable -d opencl -d perf. If you want more information about tiling you should use darktable -d opencl -d tiling -d perf.

Each time the pixelpipe is processed (when you change module parameters, zoom, pan, export etc.) you will see (in your terminal session) the total time spent in the pixelpipe and the time spent in each of the OpenCL kernels. The most reliable value is the total time spent in the pixelpipe and you should use this to assess your changes. Note: The timings given for each individual module are unreliable when running the OpenCL pixelpipe asynchronously (see asynchronous mode below).

To allow for efficient processing with OpenCL it is essential that the GPU is kept busy. Any interrupts or a stalled data flow will add to the total processing time.


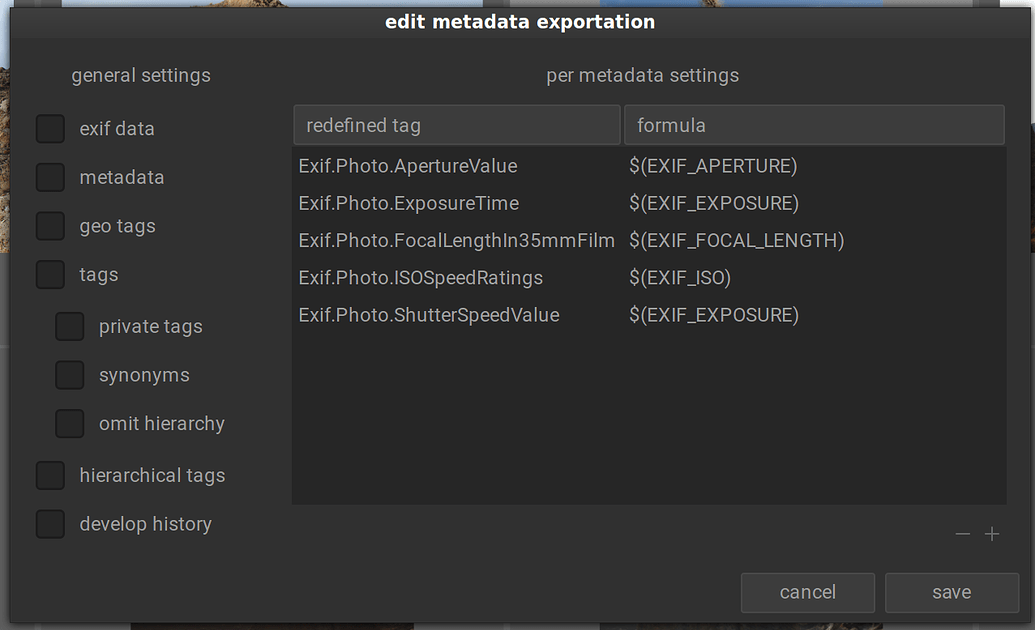

There are a number of configuration parameters that can help you to fine-tune your system’s performance. Some of these parameters are available in preferences > processing > cpu/gpu/memory and others need to be modified directly in darktable’s configuration file (found in $HOME/.config/darktable/darktablerc). This section provides some guidance on how to adjust these settings.

For best performance (and avoidance of tiling modes) you should run darktable alongside as few other applications as possible and configure darktable to use as much of your system and GPU memory as you can.

The more GPU memory you have, the better darktable will perform.

If darktable does not have sufficient memory to process the entire image in one go, modules may choose to use a “tiling strategy”, wherein the image is split into smaller parts (tiles) which are processed independently, and then stitched back together at the end.
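The tiling idea can be illustrated with a minimal sketch. This is not darktable's implementation: the function names, the tile sizes and the stand-in "module" (a simple per-pixel doubling) are all assumptions made for illustration. It only shows that processing tiles independently and stitching them back together gives the same result as processing the whole image at once, at least for an operation that looks at one pixel at a time.

```python
def process_tile(tile):
    """Hypothetical stand-in for a module: a purely per-pixel operation."""
    return [[2 * value for value in row] for row in tile]


def process_tiled(image, tile_h, tile_w):
    """Split the image into tiles, process each one independently,
    and stitch the results back into a full-size output."""
    height, width = len(image), len(image[0])
    out = [[None] * width for _ in range(height)]
    for y0 in range(0, height, tile_h):
        for x0 in range(0, width, tile_w):
            # Cut out one tile; tiles on the right/bottom edge may be smaller.
            tile = [row[x0:x0 + tile_w] for row in image[y0:y0 + tile_h]]
            result = process_tile(tile)
            # Stitch the processed tile back at its original position.
            for dy, row in enumerate(result):
                out[y0 + dy][x0:x0 + len(row)] = row
    return out


# A small 4x5 test image with distinct values.
image = [[x + 10 * y for x in range(5)] for y in range(4)]
tiled = process_tiled(image, tile_h=2, tile_w=3)
whole = process_tile(image)
print(tiled == whole)
```

Only one tile's input and output need to be held in memory at a time, which is why tiling lowers the memory requirement. An operation that reads neighboring pixels would additionally need the tiles to overlap, which this sketch leaves out.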


Without further optimization, anything between 600MB and 3GB of memory might be required to store and process image data as the pixelpipe executes. On top of this is darktable’s code segment, the code and data of any dynamically-linked system libraries, as well as further buffers that darktable uses to store intermediate states (cache) for quick access during interactive work.

All in all, darktable requires at least 4GB of physical RAM plus 4 to 8GB of additional swap space to run, but it will perform better the more memory you have.

As well as executing on your CPU, many darktable modules also have OpenCL implementations that can take full advantage of the parallel processing offered by your graphics card (GPU).

Processing a Raw image in darktable requires a great deal of system memory. A simple calculation makes this clear: For a 20 megapixel image, darktable requires a 4x32-bit floating point cell to store each pixel, meaning that each full image of this size will require approximately 300MB of memory just to store the image data. In order to actually process this image through a given module, darktable needs at least two buffers (input and output) of this size, with more complex modules potentially requiring several additional buffers for intermediate data.
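The arithmetic behind these figures can be worked through in a short sketch. The function names and the `extra_buffers` parameter are hypothetical and only serve the illustration; the inputs (4 channels of 32-bit floats per pixel, at least two buffers per module) come from the text above.

```python
def image_buffer_bytes(megapixels, channels=4, bytes_per_channel=4):
    """Bytes needed for one full-size buffer: 4 x 32-bit floats per pixel."""
    pixels = int(megapixels * 1_000_000)
    return pixels * channels * bytes_per_channel


def module_min_bytes(megapixels, extra_buffers=0):
    """Input + output buffers, plus any intermediate buffers
    (extra_buffers is a hypothetical parameter for illustration)."""
    return (2 + extra_buffers) * image_buffer_bytes(megapixels)


one_buffer = image_buffer_bytes(20)
print(f"one buffer:   {one_buffer / 2**20:.0f} MiB")             # about 305 MiB
print(f"input+output: {module_min_bytes(20) / 2**20:.0f} MiB")   # about 610 MiB
print(f"with 2 extra: {module_min_bytes(20, 2) / 2**20:.0f} MiB")
```

A single buffer for a 20 megapixel image is 320,000,000 bytes, which is the "approximately 300MB" quoted above. Two such buffers already come to roughly 600MB, and each additional intermediate buffer adds the same amount again.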
